LIU Yijiang (刘一茳) holds a Ph.D. from Nanjing University, where he leads the ISCL algorithm team. His research focuses on on-device large models, model compression and acceleration, and software-hardware co-optimization design. He has published multiple papers in CCF-A international top-tier journals and conferences. His first-author paper (AAAI’25 Pruning-Aware Tuning) has been applied in Samsung’s on-device applications for smartphones and TVs. His collaborative work with UC Berkeley (ICCV’23 Q-Diffusion) has been featured in an MIT open course and adopted by NVIDIA’s TensorRT team for deployment.

🔥🔥🔥 We are recruiting Master's and Ph.D. students! Please visit our lab page for more details: 南京大学智能感知与通信实验室 (Intelligent Sensing and Communication Lab, Nanjing University).

🔥 News

- 2025.04: 🎉🎉 IJCAI 2025: FBQuant, a novel quantization method for LLMs.

- 2024.12: 🎉🎉 AAAI 2025: PAT, an efficient pruning technology for LLMs.

- 2024.02: 🎉🎉 CVPR 2024: Cloud-Device Collaborative Learning, a method that improves compressed MLLMs on devices using cloud-based models.

- 2024.02: 🎉🎉 CVPR 2024: PromptCoT, a novel technology for enhancing T2I quality.

- 2024.04: 🎉🎉 Q-Diffusion is featured in the latest TensorRT post and an MIT open course.

- 2023.08: 🎉🎉 Project: LLaMA-Accessory, an Open-source Toolkit for LLM Development.

- 2023.08: 🎉🎉 ICCV 2023: Q-Diffusion, a low-bit quantization technology for diffusion models.

- 2023.03: 🎉🎉 CVPR 2023: NoisyQuant, a novel quantization method for Vision Transformers.

📝 Publications

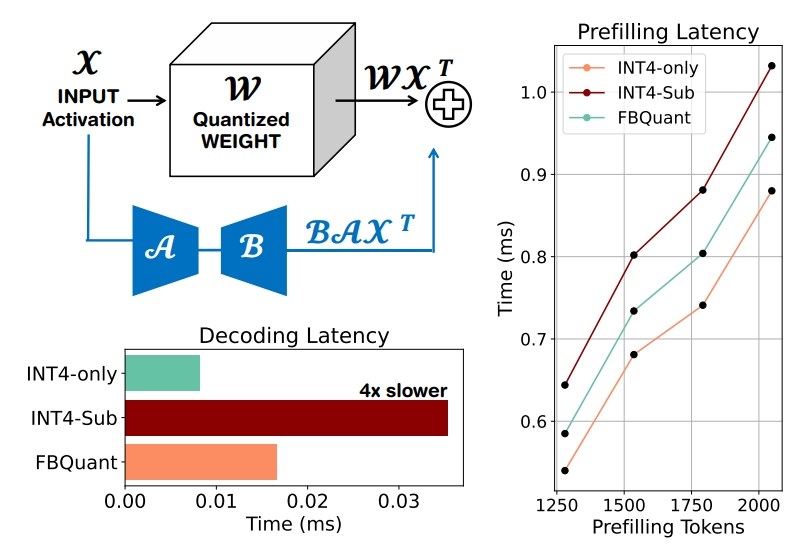

IJCAI 2025 FBQuant: FeedBack Quantization for Large Language Models

Yijiang Liu, Hengyu Fang, Liulu He, Rongyu Zhang, Yichuan Bai, Yuan Du, Li Du

- LLM quantization.

- Sub-branch approach.

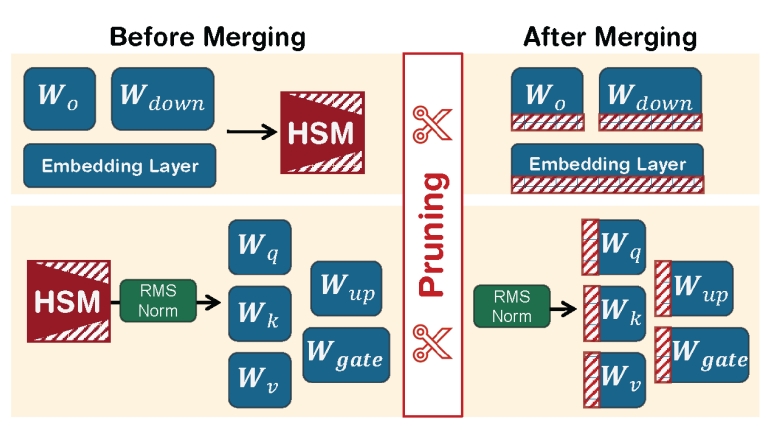

AAAI 2025 PAT: Pruning-Aware Tuning for Large Language Models

Yijiang Liu, Huanrui Yang, Youxin Chen, Rongyu Zhang, Miao Wang, Yuan Du, Li Du

- LLM structural pruning.

- Adaptation to downstream tasks.

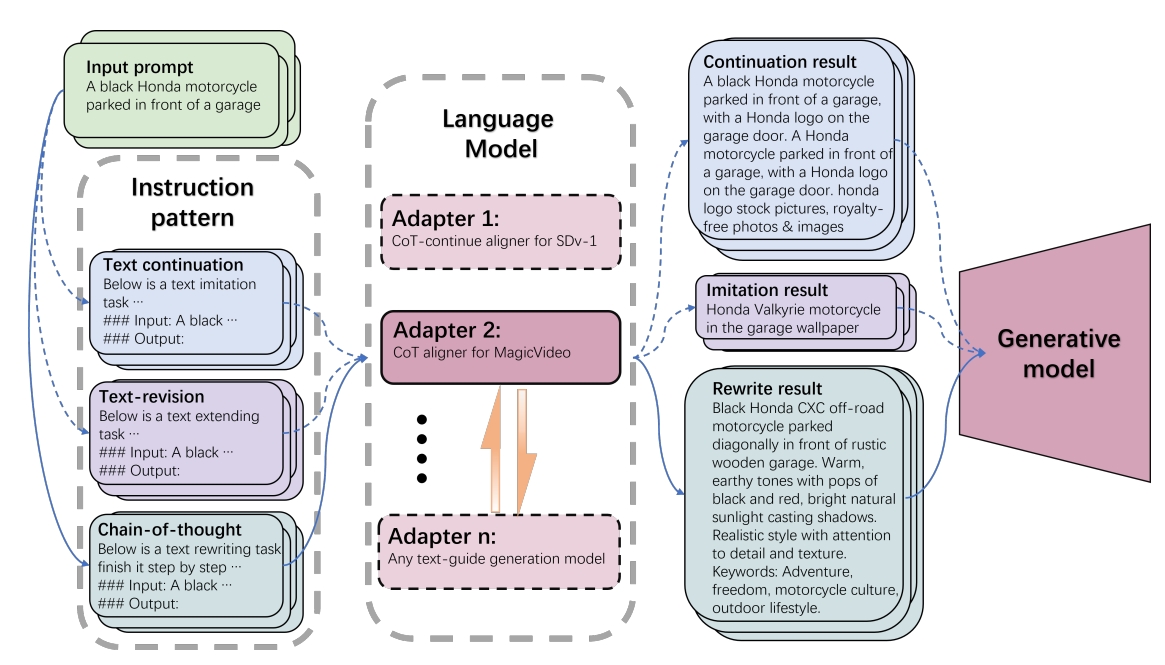

CVPR 2024 PromptCoT: Align Prompt Distribution via Adapted Chain-of-Thought

Yijiang Liu*, Junyi Yao*, Zhen Dong, Mingfei Guo, Helan Hu, Kurt Keutzer, Li Du, Daquan Zhou, Shanghang Zhang

- Enhances prompt quality using LLMs.

- Enhances T2I generation quality through better prompts.

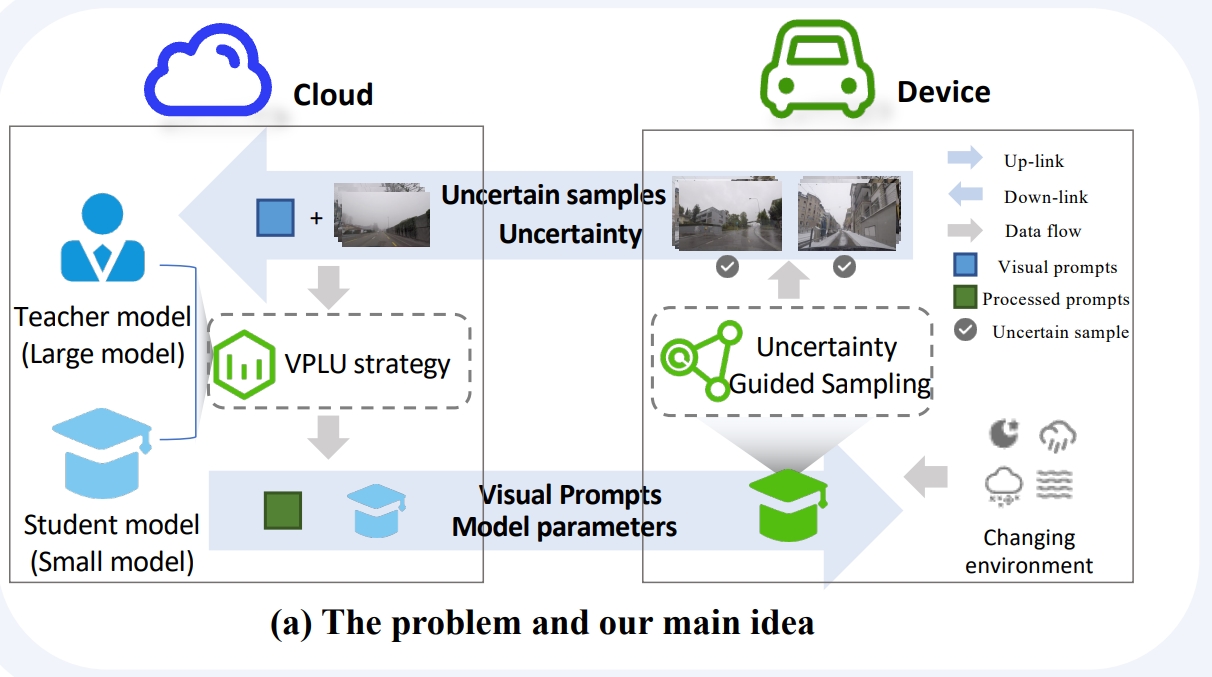

CVPR 2024 Cloud-Device Collaborative Learning for Multimodal Large Language Models

Guanqun Wang, Jiaming Liu, Chenxuan Li, Yuan Zhang, Junpeng Ma, Xinyu Wei, Kevin Zhang, Maurice Chong, Renrui Zhang, Yijiang Liu, Shanghang Zhang

- Improves compressed MLLMs on devices using cloud-based models.

- Efficient data transmission, knowledge distillation, and adaptive weight compression.

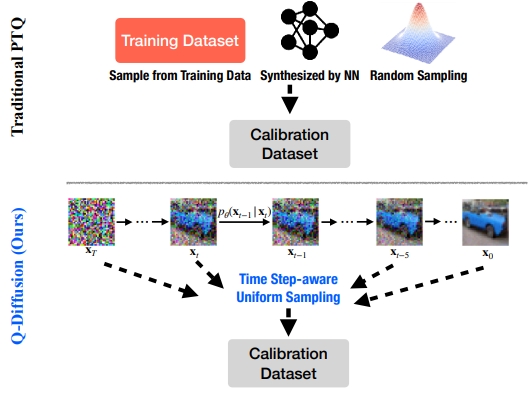

ICCV 2023 Q-Diffusion: Quantizing Diffusion Models

Xiuyu Li, Yijiang Liu, Long Lian, Huanrui Yang, Zhen Dong, Daniel Kang, Shanghang Zhang, Kurt Keutzer

- PTQ method for diffusion models.

- Timestep-aware calibration and split shortcut quantization.

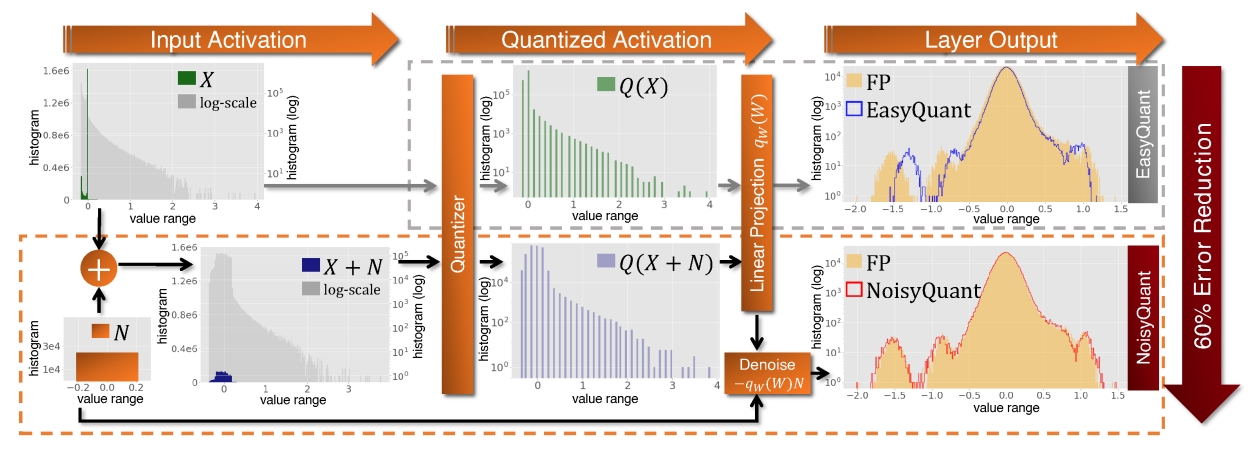

CVPR 2023 NoisyQuant: Noisy Bias-Enhanced Post-Training Activation Quantization for Vision Transformers

Yijiang Liu, Huanrui Yang, Zhen Dong, Kurt Keutzer, Li Du, Shanghang Zhang

- Using additive uniform noisy bias to actively reduce quantization error.

- Improving 6-bit quantization performance with minimal overhead.

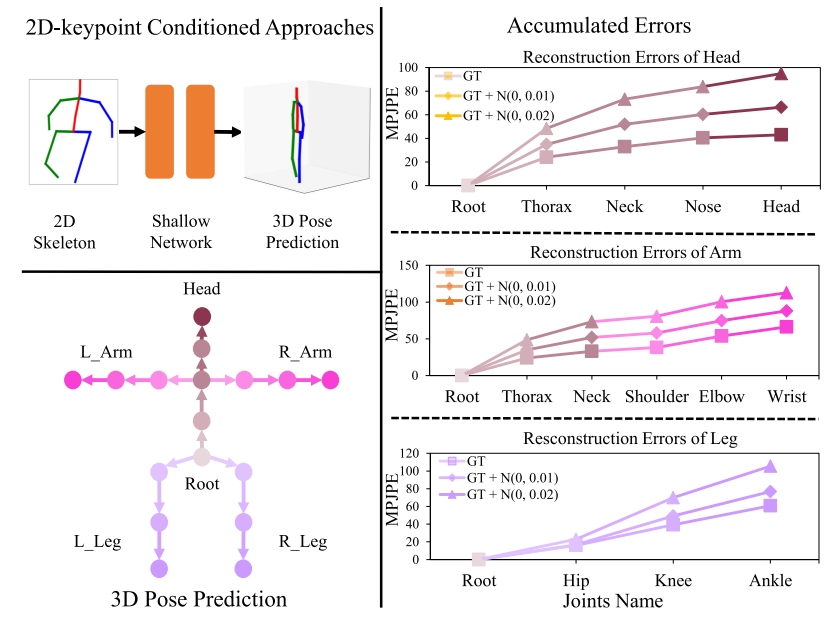

TIP 2022 Limb Pose Aware Networks for Monocular 3D Pose Estimation

Lele Wu, Zhenbo Yu, Yijiang Liu, Qingshan Liu

- Monocular 3D pose estimation.

- Kinematic constraints and a trajectory-aware approach to reduce estimation errors.

🎖 Services

- Reviewer for CVPR, AAAI, ICML, ACM MM, ICCV, NeurIPS, IJCAI, ICLR.

📖 Education

- 2022.09 - now, Nanjing University, Ph.D.

- 2008.09 - 2012.06, University of Edinburgh, M.S.

- 2008.09 - 2012.06, Xidian University, B.S.

💬 Invited Talks

- 2025.04, invited by Momenta at the 2025 Shanghai International Automobile Industry Exhibition.

💻 Visiting

- 2022.09 - 2024.12, Peking University.